AI Security Needs a Reality Check

AI has already changed how people work. Staff can ask a tool to draft notes, check data, review documents, or answer questions they cannot solve on their own. It has made work faster and more flexible. But it has also introduced a risk that many organizations still overlook.

AI can quietly give employees access to information they were never supposed to see. Not because they are trying to bypass rules. And not because they are malicious. It happens because AI systems follow instructions without understanding context or role limits. They do not ask whether a person should see something. They only ask whether they can fetch it. And in many environments today, they can.

A Quiet Internal Exposure Risk

Think about a normal workplace situation. A junior employee might ask a model something like: “What are the salary ranges on my team?” To the user, this feels harmless. They are curious, or trying to prepare for a discussion. But if the AI system has been connected to payroll data, it may pull real numbers and hand them back in seconds.

No ill intent. No obvious “breach moment.” Yet sensitive information moves to the wrong person without anyone noticing.

Here’s another scenario we see regularly: A marketing coordinator asks, “Summarize our Q4 strategy for the enterprise segment.” The AI pulls from a strategic planning document that was technically accessible on the shared drive but clearly intended only for executives. Within seconds, details about pricing strategies, acquisition targets, and competitive positioning flow into a conversation that will likely be shared with the broader team.

That is the core issue. Internal access risks used to show up in logs, approval requests, or suspicious clicks. With AI, access can happen through a single innocent request. Everyone thinks the system is helping, when in fact it is overreaching.

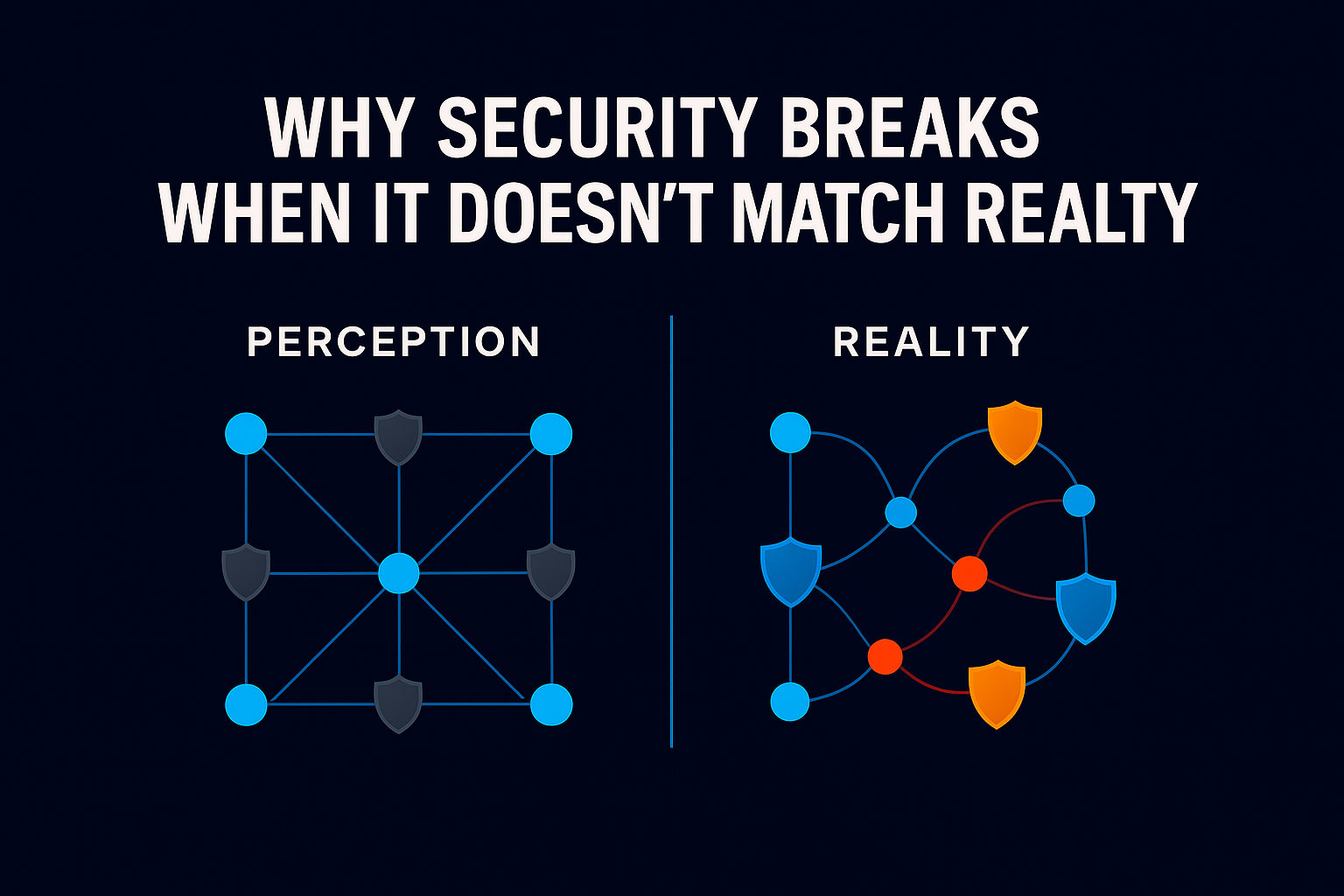

Why Current Security Controls Miss This

Traditional access control assumes that anyone who is inside the network and authenticated is safe to operate. Access is tied to role, login, and device. Once the user is inside, the system relaxes.

AI breaks that logic. It acts at speed and scale. It does not think in terms of job function or need-to-know. If the technical access path exists, it will use it. And the event often looks legitimate to security tools.

The result is data exposure that feels subtle but can have lasting impact. Even more challenging: many organizations are discovering that their existing access policies were always too broad, but this only becomes visible when AI starts exercising those permissions at scale. That marketing coordinator may have technically had read access to the strategy document all along, but never would have found it through manual search. AI changes the equation.
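One way to surface permissions that were always too broad is to compare what each account can read with what it has actually read. The sketch below is illustrative only: the `Grant` type, the `unused_grants` helper, the file paths, and the shape of the access log are all hypothetical, and a real audit would pull this data from the organization's own permission and logging systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    """A standing read permission (hypothetical record shape)."""
    user: str
    resource: str


def unused_grants(grants, access_log):
    """Return grants that never appear in the access log.

    access_log is assumed to be an iterable of (user, resource) pairs.
    Results are sorted so the output is stable.
    """
    used = set(access_log)
    stale = [g for g in grants if (g.user, g.resource) not in used]
    return sorted(stale, key=lambda g: (g.user, g.resource))


# Example data (invented for illustration).
grants = [
    Grant("coordinator", "marketing/campaign-brief.docx"),
    Grant("coordinator", "strategy/q4-enterprise-plan.docx"),
]
access_log = [("coordinator", "marketing/campaign-brief.docx")]

stale = unused_grants(grants, access_log)
for g in stale:
    print(f"{g.user} can read {g.resource} but has never opened it")
```

Grants that have never been exercised are good candidates for review before an AI assistant is connected to the same data, since the assistant will find documents a person would never have searched for.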

What Needs to Change

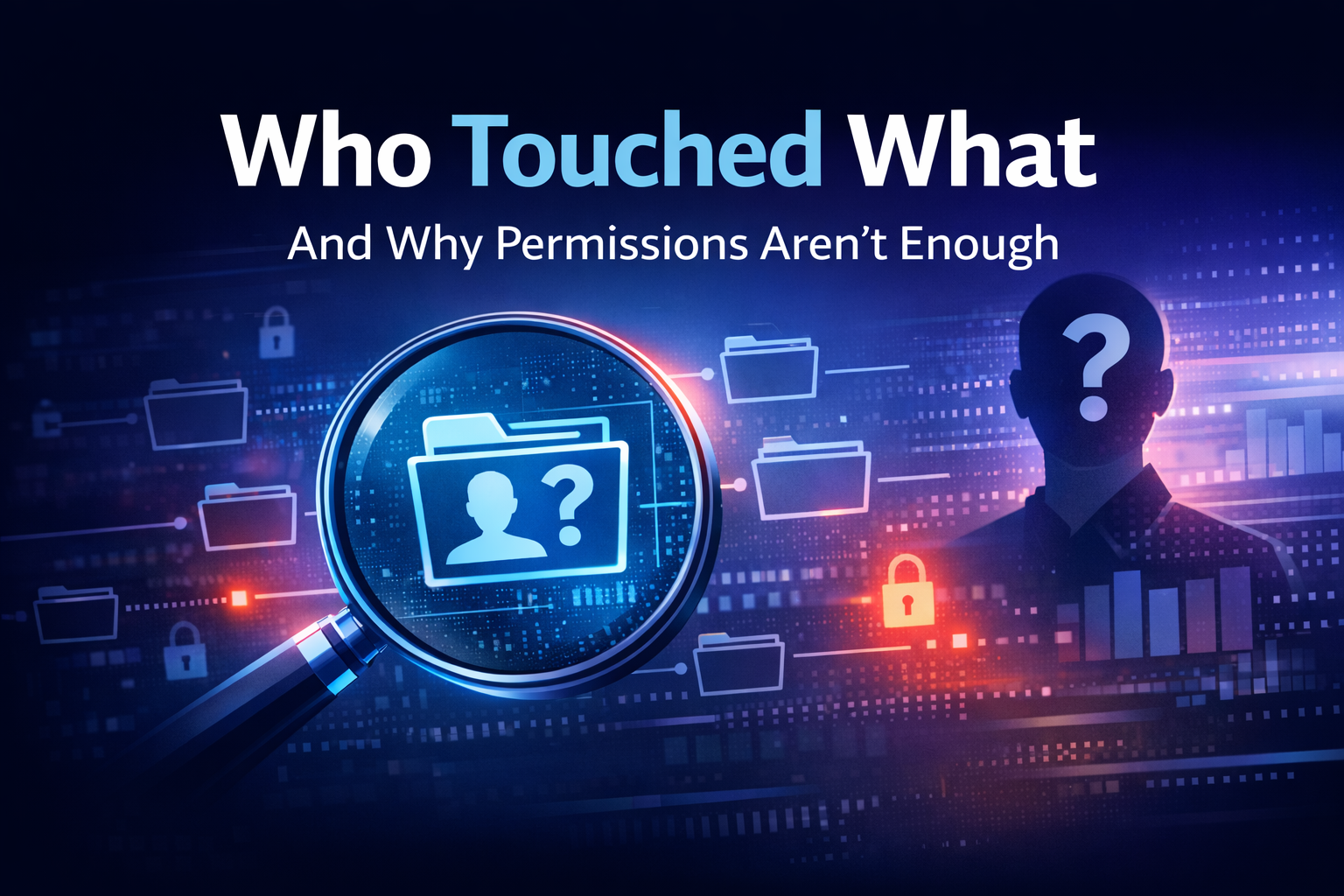

Security cannot stop at authentication anymore. It must follow context and behavior. The question can no longer be only, “Who are you?” It must also be, “What are you trying to do, and should you be allowed to do it right now?”

In practice, this means:

- Understanding what data is truly needed for someone’s work. Not just what they can technically access, but what aligns with their actual responsibilities.

- Watching for unusual access patterns, even inside trusted accounts. A finance analyst accessing HR documents, or a sales rep pulling engineering specifications, should trigger an immediate review.

- Limiting access to sensitive data unless there is a clear, current reason. Permissions should be contextual, not permanent.

- Preventing AI tools from acting outside of legitimate business need. The system should understand not just what data exists, but what makes sense for this user, in this moment, for this task.
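Two of the points above, flagging cross-department access and making permissions contextual rather than permanent, can be sketched together. This is a minimal illustration under stated assumptions: the `EXPECTED_DOMAINS` table, the `TemporaryGrant` type, the `needs_review` function, and the user names are all hypothetical, and real deployments would derive them from their own directory and data classification.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical mapping of departments to the data domains their work normally touches.
EXPECTED_DOMAINS = {
    "finance": {"finance"},
    "sales": {"sales", "marketing"},
    "hr": {"hr"},
}


@dataclass
class TemporaryGrant:
    """A time-boxed exception with a recorded reason (hypothetical shape)."""
    user: str
    domain: str
    reason: str
    expires_at: datetime

    def is_active(self, now):
        return now < self.expires_at


def needs_review(department, user, domain, grants, now):
    """True when access falls outside the department's usual domains
    and no unexpired temporary grant covers it."""
    if domain in EXPECTED_DOMAINS.get(department, set()):
        return False
    covered = any(
        g.user == user and g.domain == domain and g.is_active(now)
        for g in grants
    )
    return not covered


# Example: a finance analyst has a one-week grant to HR data for a budget review.
now = datetime(2025, 1, 15, 12, 0, tzinfo=timezone.utc)
grants = [TemporaryGrant("dana", "hr", "headcount budget review", now + timedelta(days=7))]

print(needs_review("finance", "dana", "hr", grants, now))                      # False
print(needs_review("finance", "dana", "hr", grants, now + timedelta(days=8)))  # True
print(needs_review("sales", "sam", "engineering", [], now))                    # True
```

Because the grant carries an expiry and a reason, the exception lapses on its own once the task is over, which is the difference between contextual and permanent access.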

AI is not a threat in itself. The real risk comes from giving it broad access with no ongoing check on how that access is used.

The challenge is clear: add control without slowing people down. No one will accept constant prompts or blocked queries. Controls should stay out of the way when access is valid and step in only when it’s not.

The Ray Security Approach

At Ray Security, we believe access should never be a one-time “yes.” It should adapt to real activity in real time.

Here’s how this works in practice: When that junior employee asks about salary ranges, the system does not simply check whether they have technical access to payroll data. It evaluates whether accessing payroll information aligns with their role, their current projects, and their typical behavior patterns. If it does not match, the request is blocked before the AI ever queries the data, and the security team is alerted to review.

The key difference from traditional DLP or access control is timing and intelligence. We do not wait to scan content after it has been retrieved. We evaluate the intent and context of the request itself, in real time, before data moves. And we do this without requiring users to navigate permission workflows or wait for approvals when their access is legitimate.
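The general idea of deciding before retrieval, rather than scanning afterward, can be shown with a small gate in front of the data source. This is not Ray Security's implementation. It is a simplified sketch in which `RequestContext`, `ROLES_WITH_NEED`, `evaluate`, and `guarded_fetch` are hypothetical names, the policy table is invented, and behavioral modeling is left out entirely in favor of two simple signals: role and current project scope.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Optional, Set


class Decision(Enum):
    ALLOW = "allow"
    BLOCK = "block"


@dataclass
class RequestContext:
    user: str
    role: str
    # Data categories the user's current projects legitimately touch (assumed input).
    project_categories: Set[str] = field(default_factory=set)


# Hypothetical policy table: roles with a standing business need per sensitive category.
ROLES_WITH_NEED = {
    "payroll": {"hr_partner", "payroll_admin"},
    "executive_strategy": {"executive"},
}


def evaluate(ctx: RequestContext, category: str) -> Decision:
    """Decide from role and project scope alone, without touching the data."""
    if category not in ROLES_WITH_NEED:
        return Decision.ALLOW  # not classified as sensitive in this sketch
    if ctx.role in ROLES_WITH_NEED[category]:
        return Decision.ALLOW
    if category in ctx.project_categories:
        return Decision.ALLOW
    return Decision.BLOCK


def guarded_fetch(
    ctx: RequestContext,
    category: str,
    fetch: Callable[[str], str],
    alert: Callable[[str], None],
) -> Optional[str]:
    """Call fetch only when the request is allowed; otherwise alert and return None."""
    if evaluate(ctx, category) is Decision.BLOCK:
        alert(f"blocked: user={ctx.user} role={ctx.role} category={category}")
        return None
    return fetch(category)


# Example: the junior employee's salary question never reaches the payroll store.
alerts = []
fetched = []


def fake_fetch(category: str) -> str:
    fetched.append(category)
    return f"<{category} data>"


junior = RequestContext(user="jo", role="junior_analyst")
hr = RequestContext(user="lee", role="hr_partner")

blocked_result = guarded_fetch(junior, "payroll", fake_fetch, alerts.append)
allowed_result = guarded_fetch(hr, "payroll", fake_fetch, alerts.append)
```

The property that matters here is ordering: `fetch` is never called for a blocked request, so nothing is retrieved that later has to be scanned or redacted, and an allowed request passes through with no extra prompt.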

This approach protects against accidental internal exposure while still allowing employees to benefit from AI. Users access what they truly need, and only when they need it. Everything else stays out of reach, automatically.

Why This Matters

AI has made work simpler. But it has also made access easier than ever. Organizations cannot rely on old assumptions about trust and roles. They need security that understands how data is used and reacts the moment access no longer makes sense.

The challenge is making sure the speed of AI does not outrun common-sense controls. As organizations move from AI pilots to production deployments, these scenarios will only become more common. The time to address them is now, before a well-meaning question creates a lasting problem.